AI in Digital Art

How advanced is AI art?

Image generation is one of the latest technological advances in the AI industry. Google, OpenAI, and Stability AI have publicly released next-generation text-to-image generators, one after another.

Text-to-image generation

The idea is simple: write a specific description of what you want in the picture, and the platform returns a batch of AI-generated images in seconds. Depending on the input keywords and any reference images, the results can vary endlessly in style.

For example, Stable Diffusion, developed by Stability AI, is one of the newest text-to-image models and can create realistic, detailed images.

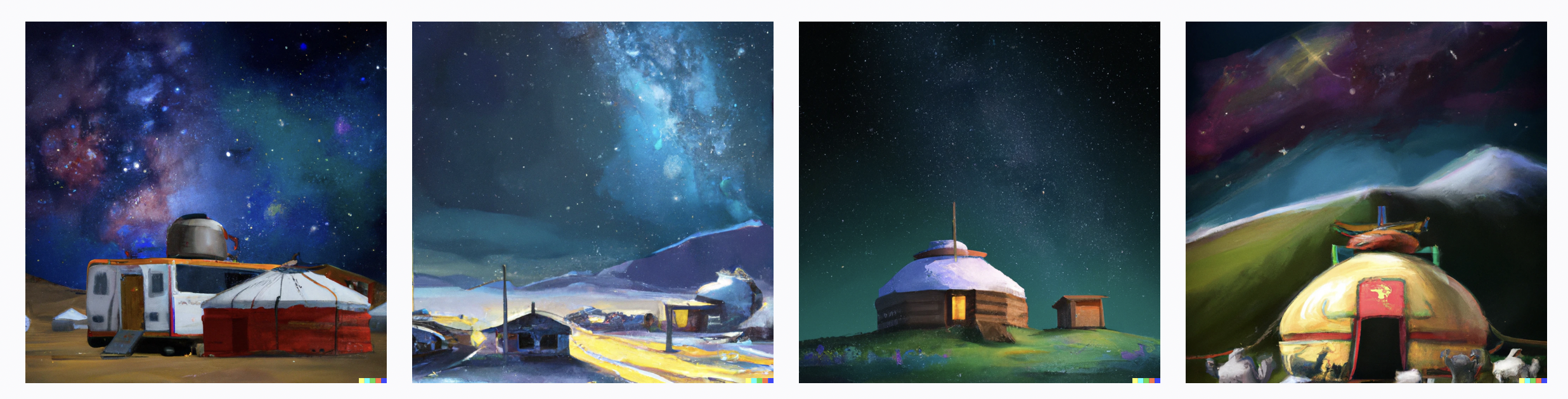

Here is what we got on Dall-E 2 for the following description: “Mongolian Starry Night, digital art.” Even if we input the same description a few times, Dall-E generates different images each time.

Here is what we got on Stable Diffusion for the following description: “Ulaanbaatar city in 2050, digital art.”

How do these algorithms work?

To understand how the text-to-image algorithms work, we should know two concepts.

The first is the GAN, or Generative Adversarial Network, an approach to the core task of a text-to-image generator: turning a line of text into a unique image. A GAN consists of two networks that are trained against each other. One, the Generator, has to interpret the assignment and produce an image. The other, the Discriminator, rates the result and judges whether it looks realistic enough to pass for a real image.
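To make the tug-of-war between the two networks concrete, here is a minimal sketch of the scores each one tries to optimize. It assumes the Discriminator outputs a number between 0 and 1 (strictly between, so the logarithm is defined), where 1 means "looks real". The function names are illustrative, and the formulas are the common textbook cross-entropy form, not necessarily what any particular product uses.

```python
import math

def discriminator_loss(score_real, score_fake):
    """Low when real images score near 1 and generated images score near 0."""
    return -(math.log(score_real) + math.log(1.0 - score_fake))

def generator_loss(score_fake):
    """Low when the Discriminator rates a generated image as real (near 1)."""
    return -math.log(score_fake)

# Hypothetical Discriminator scores for one real and one generated image.
sharp_judge = discriminator_loss(score_real=0.9, score_fake=0.1)
unsure_judge = discriminator_loss(score_real=0.5, score_fake=0.5)

caught = generator_loss(score_fake=0.1)  # the fake was spotted
fooled = generator_loss(score_fake=0.9)  # the fake passed as real

print(f"discriminator: sharp={sharp_judge:.3f} unsure={unsure_judge:.3f}")
print(f"generator:     caught={caught:.3f} fooled={fooled:.3f}")
```

Training alternates between the two: the Discriminator is updated to lower its loss, then the Generator is updated to lower its own, which pushes the generated images toward ones the Discriminator can no longer tell apart from real ones.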

The second is the Transformer, a neural network architecture built on self-attention, a mechanism that weights the significance of each part of the input data differently. Transformers have had a major impact on machine learning.

What makes it impactful is semi-supervised learning, which means the model needs less supervision, in the form of fewer labeled examples, during training. The Transformer was initially used primarily in natural language processing (NLP), but recently it has shown good results in image generation. Specifically, Generative Pre-trained Transformer (GPT) models have been used for text-to-image generation.
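The self-attention mechanism described above can be sketched in a few lines. This is a simplified illustration, not a full Transformer: it assumes the queries, keys, and values are the token vectors themselves, whereas a real model first passes them through learned projections. The three two-number "tokens" are made up for the example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Scaled dot-product self-attention over equal-length vectors.

    Assumption: no learned projections, so each token acts as its own
    query, key, and value.
    """
    dim = len(tokens[0])
    outputs, all_weights = [], []
    for query in tokens:
        # Score how relevant every token is to the current one.
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in tokens
        ]
        weights = softmax(scores)
        # New representation: a weighted mix of all token vectors.
        mixed = [
            sum(w * tok[i] for w, tok in zip(weights, tokens))
            for i in range(dim)
        ]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # hypothetical toy embeddings
outputs, weights = self_attention(tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each printed row shows how strongly one token attends to every token in the input. The first token puts more weight on the second token, which points in a similar direction, than on the third.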

How do these algorithms differ from each other?

Stable Diffusion generates an image through a diffusion process at runtime. The process starts with random noise and then removes it step by step, bringing the image closer to the provided text description until no noise remains. The original Dall-E, on the other hand, uses a 12-billion-parameter version of the GPT-3 transformer model to interpret the text description and generate corresponding images. Dall-E 2 moved away from that design: it also produces its images with a diffusion process, guided by CLIP text and image embeddings.
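The "start with noise, then refine" loop of a diffusion process can be illustrated with a toy example. This is not Stable Diffusion's actual sampler. The big assumption is the stand-in denoiser, which peeks at a known target, while a real model has no target to look at and instead uses a trained network that predicts the noise from the text prompt. The four-number "image", the step count, and the strength are all hypothetical.

```python
import random

def toy_denoiser(image, target):
    """Stand-in for the trained network: estimate the noise left in `image`.

    Assumption: it simply compares against a known `target`.
    """
    return [pixel - goal for pixel, goal in zip(image, target)]

def generate(target, steps=50, strength=0.1, seed=0):
    """Start from random noise and remove a little of it at every step."""
    rng = random.Random(seed)
    image = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise
    errors = []
    for _ in range(steps):
        noise = toy_denoiser(image, target)
        image = [pixel - strength * n for pixel, n in zip(image, noise)]
        # Track how far the image still is from the target.
        errors.append(sum(abs(p - g) for p, g in zip(image, target)))
    return image, errors

target = [0.2, 0.8, 0.5, 0.1]  # hypothetical 4-pixel "image" the prompt describes
image, errors = generate(target)
print(f"error after first step: {errors[0]:.4f}")
print(f"error after last step:  {errors[-1]:.4f}")
```

The error shrinks at every step, which mirrors the way a diffusion model moves from noise toward an image that matches the description. Changing the seed changes the starting noise, which is one reason the same prompt can produce a different image each time.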