Use case of Generative AI - Text2Image

📌 How to generate well-known people portraits using Stable Diffusion

Who is this article for?

This article is for those who possess a basic understanding of Text2Image and are interested in further customization of text2image models. We show you how to generate images of well-known people using a Text2Image model called Stable Diffusion.

Those who read this article are supposed to:

- know how to use pip

- have already installed python

- have already created your pip env

What is Stable Diffusion?

Stable Diffusion introduced by Stability AI, now available to the public, represents a significant advancement in AI image generation. Developed in collaboration with HuggingFace and CoreWeave, this cutting-edge technology offers a permissive Creative ML OpenRAIL-M license, allowing for both commercial and non-commercial use. It emphasizes ethical and legal utilization, with users bearing the responsibility of adhering to the license terms. To ensure user satisfaction, Stable Diffusion incorporates an AI-based Safety Classifier that filters out undesired outputs, enabling better control over generated images. Community input is highly valued to enhance the performance of this feature and improve the model's ability to accurately represent desired outcomes.

The release of Stable Diffusion is the result of extensive collaboration and tireless efforts to compress humanity's visual knowledge into a few gigabytes. The creators emphasize the importance of using this technology in an ethical, moral, and legal manner, while actively contributing to the community and engaging in constructive discussions. Additional features and API access, such as local GPU support, animation, and logic-based multi-stage workflows, will be activated soon. Partnerships and programs will also be announced to support users and expand the technology's reach.

The recommended model weights for Stable Diffusion are v1.4 470k, building upon the v1.3 440k model initially provided to researchers. The expected VRAM usage upon release is 6.9 Gb. In the future, optimized versions of the model will be released, along with compatibility updates for AMD, Macbook M1/M2, and other chipsets, while NVIDIA chips are currently recommended. These developments aim to enhance performance, image quality, and accessibility. Stable Diffusion represents a significant step forward in AI image generation and has the potential to transform the way we communicate. The creators are committed to continuously improving and expanding the technology, ensuring a brighter, more creative future for all.

What makes Stable Diffusion so outperformed?

Stable Diffusion is one of the well-known DMs. DMs refer to diffusion models, which are a type of generative model used in image synthesis and other computer vision tasks. These models are constructed using a hierarchy of denoising autoencoders. Diffusion models have shown impressive results in image synthesis and are considered state-of-the-art in class-conditional image synthesis and super-resolution tasks.

Unlike other types of generative models, such as GANs, diffusion models are likelihood-based models. They do not suffer from issues like mode-collapse and training instabilities often encountered in GANs. Additionally, diffusion models can handle complex distributions and generate diverse outputs without requiring an excessively large number of parameters. This parameter efficiency sets them apart from autoregressive (AR) models, which typically involve billions of parameters for the high-resolution synthesis of natural scenes.

Diffusion models have demonstrated their effectiveness in various image synthesis tasks, including inpainting, colorization, and stroke-based synthesis. They offer a promising alternative to GANs and AR models, combining the benefits of likelihood-based modeling, stability, and computational efficiency.

The main commitment of the Stable Diffusion team is to improve the training and sampling efficiency of denoising diffusion models (DMs) without compromising their quality. They aim to address the limitations of DMs, which typically operate directly in pixel space and require extensive computational resources for optimization and inference. To achieve this, they propose using latent diffusion models (LDMs) that leverage the latent space of pre-trained autoencoders. This approach allows them to strike a near-optimal balance between complexity reduction and detail preservation, resulting in improved visual fidelity.

Furthermore, the team introduces cross-attention layers into the model architecture, enabling DMs to serve as powerful and flexible generators for various conditioning inputs, such as text or bounding boxes. They demonstrate the effectiveness of LDMs through experiments, achieving state-of-the-art results in tasks such as image inpainting, class-conditional image synthesis, text-to-image synthesis, unconditional image generation, and super-resolution. Notably, the proposed LDMs significantly reduce computational requirements compared to pixel-based DMs, further enhancing their practical applicability. They emphasize that their approach offers favorable results across a wide range of conditional image synthesis tasks, eliminating the need for task-specific architectures.

Preparing python environment

# install torch, transformers, and diffusers

$ pip install torch

$ pip install transformers

$ pip install diffusers==0.11.1

Image generation using Stable Diffusion

import torch

from diffusers import StableDiffusionPipeline

my_pipe = StableDiffusionPipeline.from_pretrained(

"stabilityai/stable-diffusion-2-1-base", torch_dtype=torch.float16

)

my_pipe = my_pipe.to("cuda")

my_prompt = "Please draw a portrait of Barack Obama, the 44th president of the United States, with a focus on capturing his distinctive facial features and serious yet charismatic expression."

generated_image = my_pipe(my_prompt).images[0]

my_prompt = "Draw a portrait of Neil Armstrong in his NASA astronaut uniform with a serious expression on his face, holding a helmet under his arm."

generated_image = my_pipe(my_prompt).images[0]

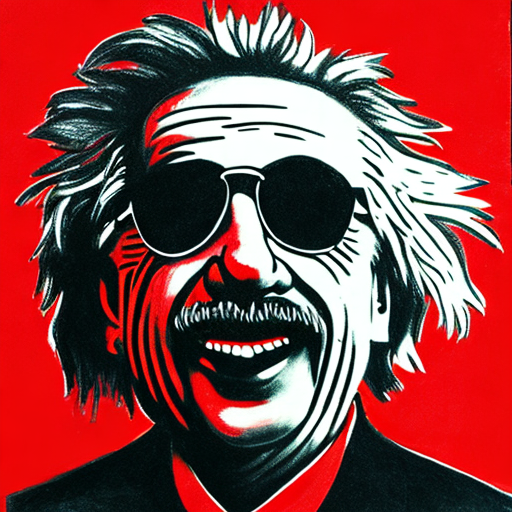

my_prompt = "Draw a portrait of Albert Einstein wearing sunglasses on his excited face."

generated_image = my_pipe(my_prompt).images[0]

Summary

This article presents an example of how we can utilize Text2Image to generate well-known people portraits with the help of Stable Diffusion.

The Stable Diffusion, known for its excellent performance, has attained impressive quality by enhancing the effectiveness of learning and sampling in denoising diffusion models. As we expected, Stable Diffusion generated high-quality images for well-known people.